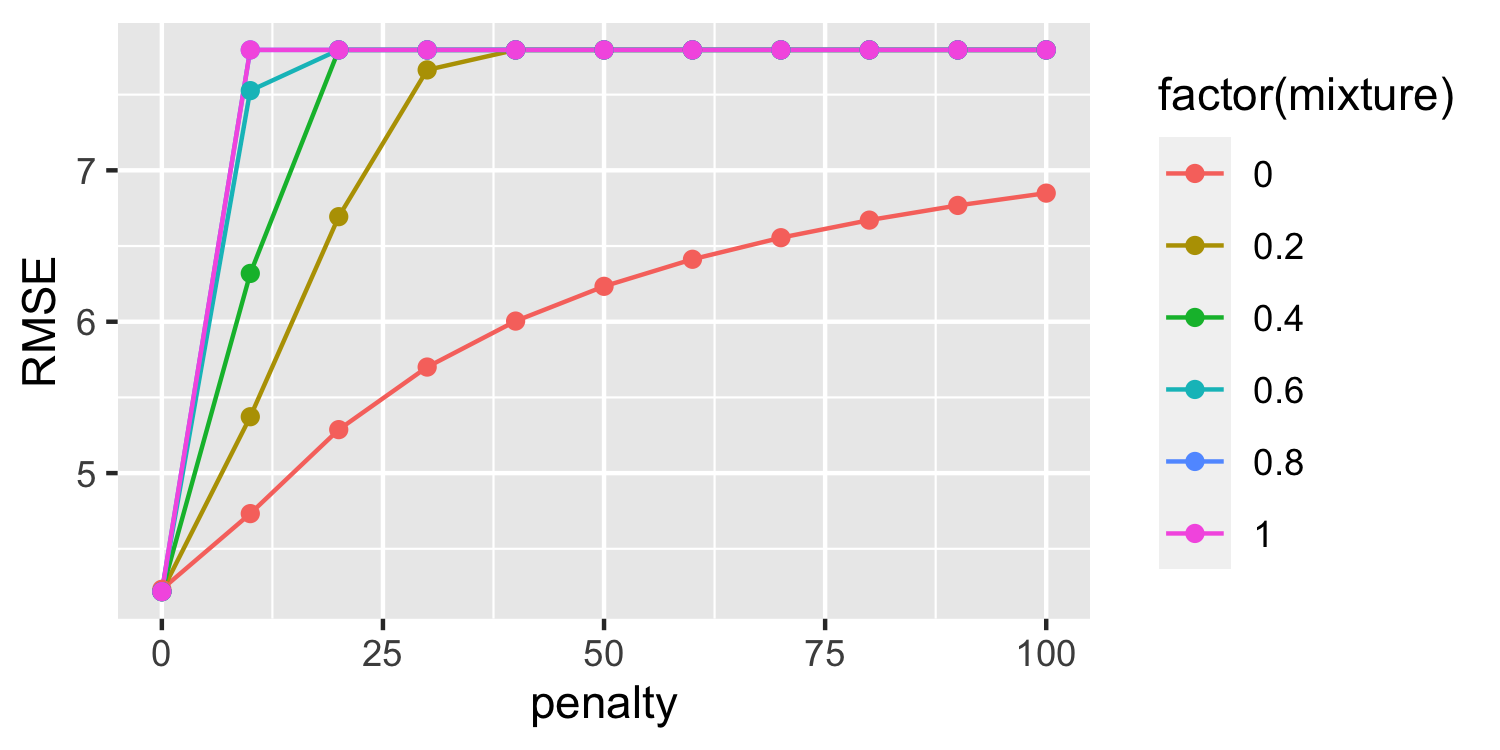

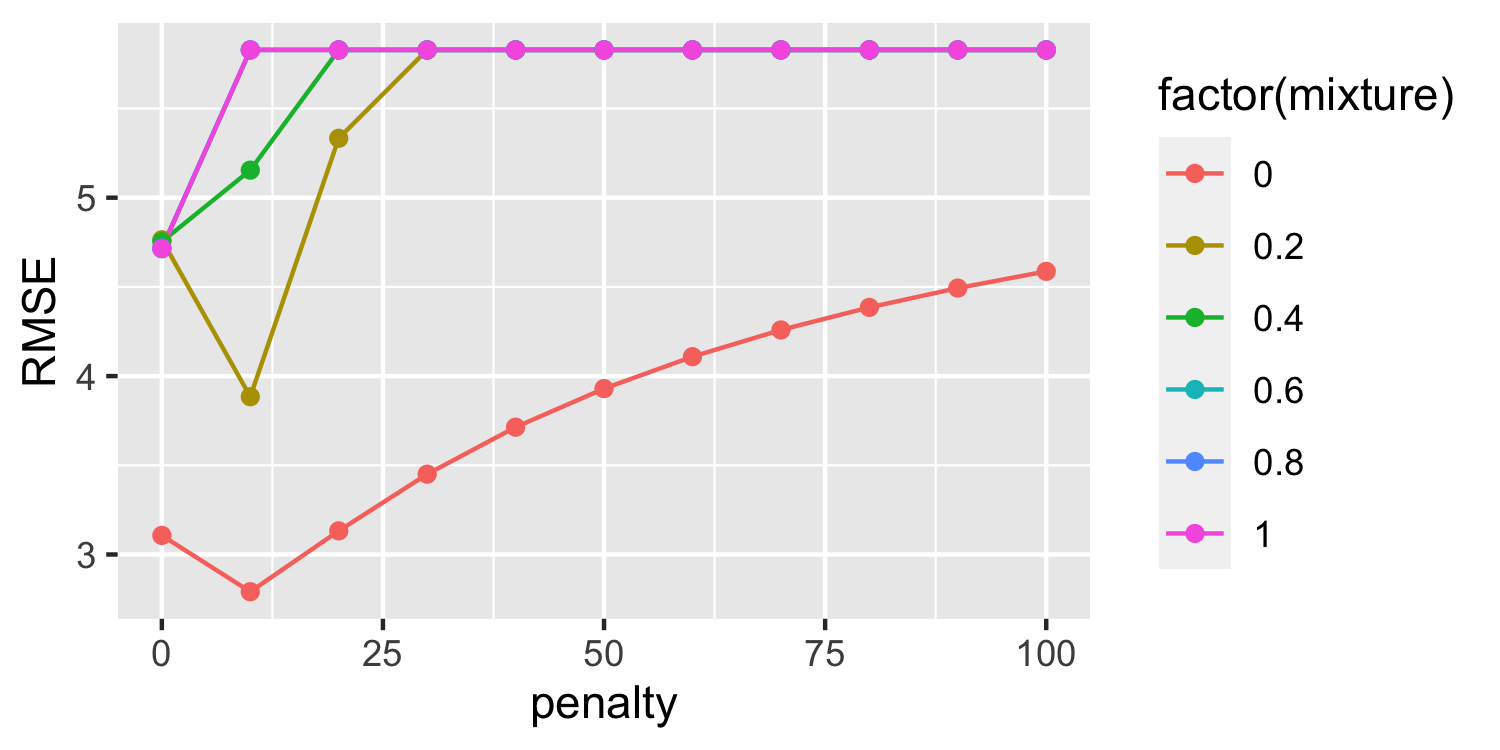

class: center, middle, inverse, title-slide

# tidymodels
### Dr. D’Agostino McGowan

---
layout: true

<div class="my-footer">
<span>
Dr. Lucy D'Agostino McGowan <i>adapted from Alison Hill's Introduction to ML with the Tidyverse</i>
</span>
</div>

---

## tidymodels

```r
lm_spec <- 
  linear_reg() %>%           # Pick linear regression
  set_engine(engine = "lm")  # set engine
lm_spec
```

```
## Linear Regression Model Specification (regression)
## 
## Computational engine: lm
```

```r
lm_fit <- fit(lm_spec,
              mpg ~ horsepower,
              data = Auto)
```

---

## Validation set approach

```r
Auto_split <- initial_split(Auto, prop = 0.5)
Auto_split
```

```
## <Analysis/Assess/Total>
## <196/196/392>
```

--

* Extract the training and testing data

```r
training(Auto_split)
testing(Auto_split)
```

---

## Validation set approach

```r
Auto_train <- training(Auto_split)
```

```r
Auto_train
```

.small[
```
## # A tibble: 196 x 9
##      mpg cylinders displacement horsepower weight acceleration  year origin
##    <dbl>     <dbl>        <dbl>      <dbl>  <dbl>        <dbl> <dbl>  <dbl>
##  1    18         8          307        130   3504         12      70      1
##  2    18         8          318        150   3436         11      70      1
##  3    16         8          304        150   3433         12      70      1
##  4    17         8          302        140   3449         10.5    70      1
##  5    15         8          429        198   4341         10      70      1
##  6    15         8          390        190   3850          8.5    70      1
##  7    14         8          340        160   3609          8      70      1
##  8    15         8          400        150   3761          9.5    70      1
##  9    24         4          113         95   2372         15      70      3
## 10    22         6          198         95   2833         15.5    70      1
## # … with 186 more rows, and 1 more variable: name <fct>
```
]

---

## A faster way!

* You can use `last_fit()` and specify the split
* This will automatically fit the model on the training data from the split
* Instead of specifying which metric to calculate (with `rmse` as before) you can just use `collect_metrics()` and it will automatically calculate the metrics on the `test` data from the split

```r
set.seed(100)
Auto_split <- initial_split(Auto, prop = 0.5)
lm_fit <- last_fit(lm_spec,
                   mpg ~ horsepower,
*                  split = Auto_split)
lm_fit %>%
* collect_metrics()
```

```
## # A tibble: 2 x 3
##   .metric .estimator .estimate
##   <chr>   <chr>          <dbl>
## 1 rmse    standard       4.87 
## 2 rsq     standard       0.625
```

---

## What about cross-validation?

```r
Auto_cv <- vfold_cv(Auto, v = 5)
Auto_cv
```

```
## #  5-fold cross-validation 
## # A tibble: 5 x 2
##   splits           id   
##   <list>           <chr>
## 1 <split [313/79]> Fold1
## 2 <split [313/79]> Fold2
## 3 <split [314/78]> Fold3
## 4 <split [314/78]> Fold4
## 5 <split [314/78]> Fold5
```

---

## What if we wanted to do some _preprocessing_?

* For the shrinkage methods we discussed it was important to _scale_ the variables

--

.question[
What does this mean?
]

--

.question[
What would happen if we _scale_ **before** doing cross-validation? Will we get different answers?
]

---

## What if we wanted to do some _preprocessing_?

.small[
```r
Auto_scaled <- Auto %>%
  mutate(horsepower = scale(horsepower))
sd(Auto_scaled$horsepower)
```

```
## [1] 1
```

```r
Auto_cv_scaled <- vfold_cv(Auto_scaled, v = 5)
map_dbl(Auto_cv_scaled$splits,
        function(x) {
          dat <- as.data.frame(x)$horsepower
          sd(dat)
        })
```

```
## [1] 1.0115202 1.0025849 0.9834936 0.9733806 1.0293404
```
]

---

## What if we wanted to do some _preprocessing_?

* `recipe()`!

--

* Using the `recipe()` function along with `step_*()` functions, we can specify _preprocessing_ steps and R will automagically apply them to each fold appropriately.

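To make "apply them to each fold appropriately" concrete, here is a minimal base-R sketch of the idea: the scaling factor is estimated from the analysis rows of each fold only, then reused on the held-out rows. It uses the built-in `mtcars` data and hand-rolled fold assignments as stand-ins (both are assumptions for illustration, not the `Auto` data or the actual `recipes` internals).

```r
# Illustrative sketch only: mtcars and fold_id are stand-ins, not from the slides
set.seed(1)
n <- nrow(mtcars)
fold_id <- sample(rep(1:5, length.out = n))  # randomly assign rows to 5 folds

fold_sds <- sapply(1:5, function(k) {
  analysis   <- mtcars[fold_id != k, ]
  assessment <- mtcars[fold_id == k, ]

  hp_sd <- sd(analysis$hp)  # estimated from the analysis rows only

  c(analysis   = sd(analysis$hp / hp_sd),    # 1 by construction
    assessment = sd(assessment$hp / hp_sd))  # close to, but generally not, 1
})

fold_sds
```

Within every fold the analysis set has standard deviation 1, and the held-out rows never contribute to the scaling factor.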
--

```r
rec <- recipe(mpg ~ horsepower, data = Auto) %>%
* step_scale(horsepower)
```

--

* You can find all of the potential preprocessing **steps** here: https://tidymodels.github.io/recipes/reference/index.html

---

## Where do we plug in this recipe?

* The `recipe` gets plugged into the `fit_resamples()` function

--

```r
Auto_cv <- vfold_cv(Auto, v = 5)

rec <- recipe(mpg ~ horsepower, data = Auto) %>%
  step_scale(horsepower)

results <- fit_resamples(lm_spec,
                         preprocessor = rec,
                         resamples = Auto_cv)

results %>%
  collect_metrics()
```

```
## # A tibble: 2 x 5
##   .metric .estimator  mean     n std_err
##   <chr>   <chr>      <dbl> <int>   <dbl>
## 1 rmse    standard   4.88      5  0.317 
## 2 rsq     standard   0.613     5  0.0249
```

---

## What if we want to predict mpg with more variables?

* Now we still want to add a step to _scale_ predictors
* We could either write out all predictors individually to scale them

--

* OR we could use the `all_predictors()` shorthand.

--

```r
rec <- recipe(mpg ~ horsepower + displacement + weight, data = Auto) %>%
  step_scale(all_predictors())
```

---

## Putting it together

```r
rec <- recipe(mpg ~ horsepower + displacement + weight, data = Auto) %>%
  step_scale(all_predictors())

results <- fit_resamples(lm_spec,
                         preprocessor = rec,
                         resamples = Auto_cv)

results %>%
  collect_metrics()
```

```
## # A tibble: 2 x 5
##   .metric .estimator  mean     n std_err
##   <chr>   <chr>      <dbl> <int>   <dbl>
## 1 rmse    standard   4.22      5  0.272 
## 2 rsq     standard   0.709     5  0.0153
```

---

## Ridge, Lasso, and Elastic net

* When specifying your model, you can indicate whether you would like to use ridge, lasso, or elastic net. We can write a general equation to minimize:

`$$RSS + \lambda\left((1-\alpha)\sum_{j=1}^p\beta_j^2+\alpha\sum_{j=1}^p|\beta_j|\right)$$`

--

```r
lm_spec <- linear_reg() %>%
* set_engine("glmnet")
```

* First specify the engine. We'll use `glmnet`

--

* The `linear_reg()` function has two additional parameters, `penalty` and `mixture`

--

* `penalty` is `\(\lambda\)` from our equation.

--

* `mixture` is a number between 0 and 1 representing `\(\alpha\)`

---

## Ridge, Lasso, and Elastic net

`$$RSS + \lambda\left((1-\alpha)\sum_{j=1}^p\beta_j^2+\alpha\sum_{j=1}^p|\beta_j|\right)$$`

.question[
What would we set `mixture` to in order to perform Ridge regression?
]

--

.small[
```r
*ridge_spec <- linear_reg(penalty = 100, mixture = 0) %>%
  set_engine("glmnet")
```
]

---

## Ridge, Lasso, and Elastic net

`$$RSS + \lambda\left((1-\alpha)\sum_{j=1}^p\beta_j^2+\alpha\sum_{j=1}^p|\beta_j|\right)$$`

.small[
```r
*ridge_spec <- linear_reg(penalty = 100, mixture = 0) %>%
  set_engine("glmnet")
```
]

--

.small[
```r
*lasso_spec <- linear_reg(penalty = 5, mixture = 1) %>%
  set_engine("glmnet")
```
]

--

.small[
```r
*enet_spec <- linear_reg(penalty = 60, mixture = 0.7) %>%
  set_engine("glmnet")
```
]

---

## Okay, but we wanted to look at 3 different models!

.small[
```r
ridge_spec <- linear_reg(penalty = 100, mixture = 0) %>%
  set_engine("glmnet")

results <- fit_resamples(ridge_spec,
                         preprocessor = rec,
                         resamples = Auto_cv)
```
]

--

.small[
```r
lasso_spec <- linear_reg(penalty = 5, mixture = 1) %>%
  set_engine("glmnet")

results <- fit_resamples(lasso_spec,
                         preprocessor = rec,
                         resamples = Auto_cv)
```
]

---

.small[
```r
elastic_spec <- linear_reg(penalty = 60, mixture = 0.7) %>%
  set_engine("glmnet")

results <- fit_resamples(elastic_spec,
                         preprocessor = rec,
                         resamples = Auto_cv)
```
]

--

* 😱 this looks like copy + pasting!

---

## tune 🎶

```r
*penalty_spec <- linear_reg(penalty = tune(), mixture = tune()) %>%
  set_engine("glmnet")
```

* Notice the code above has `tune()` for the penalty and the mixture. Those are the things we want to vary!

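As a reminder of what the two tuned arguments control, the penalty term from the equation on the earlier slides can be sketched in base R. The helper `enet_penalty()` and the coefficient values are hypothetical, written only for illustration. It follows the slide's equation literally, and `glmnet` scales the terms of its own objective somewhat differently.

```r
# Hypothetical helper: the penalty term exactly as written in the slide equation
# lambda * ((1 - alpha) * sum(beta^2) + alpha * sum(|beta|))
enet_penalty <- function(beta, penalty, mixture) {
  penalty * ((1 - mixture) * sum(beta^2) + mixture * sum(abs(beta)))
}

beta <- c(2, -1, 0.5)  # made-up coefficients

ridge_pen <- enet_penalty(beta, penalty = 10, mixture = 0)    # only the squared term remains
lasso_pen <- enet_penalty(beta, penalty = 10, mixture = 1)    # only the absolute-value term remains
enet_pen  <- enet_penalty(beta, penalty = 10, mixture = 0.7)  # a weighted blend of the two

c(ridge = ridge_pen, lasso = lasso_pen, elastic_net = enet_pen)
```

Setting `mixture = 0` or `mixture = 1` recovers the pure ridge and pure lasso penalties, which is why a single tuned specification can cover all three models.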
---

## tune 🎶

* Now we need to create a grid of potential penalties ( `\(\lambda\)` ) and mixtures ( `\(\alpha\)` ) that we want to test
* Instead of `fit_resamples()` we are going to use `tune_grid()`

```r
grid <- expand_grid(penalty = seq(0, 100, by = 10),
                    mixture = seq(0, 1, by = 0.2))

results <- tune_grid(penalty_spec,
                     preprocessor = rec,
*                    grid = grid,
                     resamples = Auto_cv)
```

---

## tune 🎶

```r
results %>%
  collect_metrics()
```

```
## # A tibble: 132 x 7
##    penalty mixture .metric .estimator  mean     n std_err
##      <dbl>   <dbl> <chr>   <chr>      <dbl> <int>   <dbl>
##  1       0     0   rmse    standard   4.23      5  0.280 
##  2       0     0   rsq     standard   0.708     5  0.0166
##  3       0     0.2 rmse    standard   4.22      5  0.273 
##  4       0     0.2 rsq     standard   0.709     5  0.0154
##  5       0     0.4 rmse    standard   4.22      5  0.273 
##  6       0     0.4 rsq     standard   0.709     5  0.0154
##  7       0     0.6 rmse    standard   4.22      5  0.273 
##  8       0     0.6 rsq     standard   0.709     5  0.0154
##  9       0     0.8 rmse    standard   4.22      5  0.273 
## 10       0     0.8 rsq     standard   0.709     5  0.0153
## # … with 122 more rows
```

---

## Subset results

```r
results %>%
  collect_metrics() %>%
  filter(.metric == "rmse") %>%
  arrange(mean)
```

```
## # A tibble: 66 x 7
##    penalty mixture .metric .estimator  mean     n std_err
##      <dbl>   <dbl> <chr>   <chr>      <dbl> <int>   <dbl>
##  1       0     0.2 rmse    standard    4.22     5   0.273
##  2       0     0.6 rmse    standard    4.22     5   0.273
##  3       0     0.4 rmse    standard    4.22     5   0.273
##  4       0     0.8 rmse    standard    4.22     5   0.273
##  5       0     1   rmse    standard    4.22     5   0.273
##  6       0     0   rmse    standard    4.23     5   0.280
##  7      10     0   rmse    standard    4.73     5   0.308
##  8      20     0   rmse    standard    5.29     5   0.313
##  9      10     0.2 rmse    standard    5.37     5   0.316
## 10      30     0   rmse    standard    5.70     5   0.314
## # … with 56 more rows
```

* Since this is a data frame, we can do things like filter and arrange!

--

.question[
Which would you choose?
]

---

```r
results %>%
  collect_metrics() %>%
  filter(.metric == "rmse") %>%
  ggplot(aes(penalty, mean, color = factor(mixture), group = factor(mixture))) +
  geom_line() +
  geom_point() +
  labs(y = "RMSE")
```

---

## Putting it all together

* Often we can use a combination of all of these tools together
* First split our data
* Do cross-validation on _just the training data_ to tune the parameters
* Use `last_fit()` with the selected parameters, specifying the split data so that it is evaluated on the left-out test sample

---

## Putting it all together

.small[
```r
auto_split <- initial_split(Auto, prop = 0.5)
auto_train <- training(auto_split)
auto_cv <- vfold_cv(auto_train, v = 5)

rec <- recipe(mpg ~ horsepower + displacement + weight, data = auto_train) %>%
  step_scale(all_predictors())

tuning <- tune_grid(penalty_spec,
                    rec,
                    grid = grid,
                    resamples = auto_cv)

tuning %>%
  collect_metrics() %>%
  filter(.metric == "rmse") %>%
  arrange(mean)
```

```
## # A tibble: 66 x 7
##    penalty mixture .metric .estimator  mean     n std_err
##      <dbl>   <dbl> <chr>   <chr>      <dbl> <int>   <dbl>
##  1       0     0   rmse    standard    4.48     5   0.195
##  2       0     1   rmse    standard    4.49     5   0.223
##  3       0     0.8 rmse    standard    4.49     5   0.223
##  4       0     0.6 rmse    standard    4.49     5   0.223
##  5       0     0.4 rmse    standard    4.51     5   0.228
##  6       0     0.2 rmse    standard    4.51     5   0.228
##  7      10     0   rmse    standard    4.90     5   0.170
##  8      20     0   rmse    standard    5.44     5   0.203
##  9      10     0.2 rmse    standard    5.52     5   0.216
## 10      30     0   rmse    standard    5.84     5   0.228
## # … with 56 more rows
```
]

---

## Putting it all together

.small[
```r
final_spec <- linear_reg(penalty = 0, mixture = 0) %>%
  set_engine("glmnet")
*fit <- last_fit(final_spec,
                 rec,
*                split = auto_split)
fit %>%
  collect_metrics()
```

```
## # A tibble: 2 x 3
##   .metric .estimator .estimate
##   <chr>   <chr>          <dbl>
## 1 rmse    standard       4.07 
## 2 rsq     standard       0.714
```
]
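
The `penalty = 0, mixture = 0` values above were read off the sorted table by eye. A minimal base-R sketch of making that choice in code follows, using the first ten RMSE rows from the tuning output re-typed by hand (the data frame `rmse_tbl` is an illustrative stand-in, not an object created in these slides).

```r
# First ten rows of the RMSE table from the tuning slide, re-typed by hand
rmse_tbl <- data.frame(
  penalty = c(0, 0, 0, 0, 0, 0, 10, 20, 10, 30),
  mixture = c(0, 1, 0.8, 0.6, 0.4, 0.2, 0, 0, 0.2, 0),
  mean    = c(4.48, 4.49, 4.49, 4.49, 4.51, 4.51, 4.90, 5.44, 5.52, 5.84)
)

# Keep the parameter combination with the smallest cross-validated RMSE
best <- rmse_tbl[which.min(rmse_tbl$mean), c("penalty", "mixture")]
best
```

The `tune` package also provides `select_best()` and `finalize_model()`, which are meant for this step when working directly with the `tuning` object.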